Ninja AI's SuperAgent is setting a new benchmark for what an AI system can achieve. By combining cutting-edge inference-level optimization with multi-model orchestration and critique-based refinement, SuperAgent delivers results that outperform even the most popular foundational models, such as GPT-4o, Gemini 1.5 Pro, and Claude 3.5 Sonnet.

SuperAgent achieved state-of-the-art (SOTA) results on the Arena-Hard benchmark. In this blog post, we discuss those results along with its performance on other benchmarks.

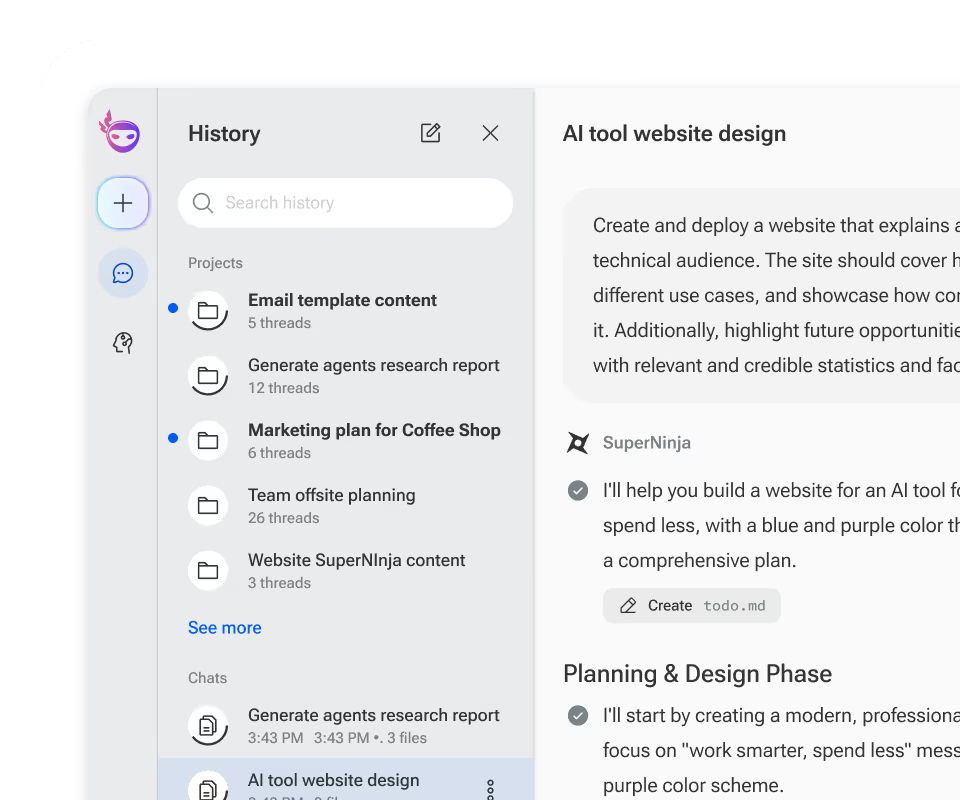

What Is SuperAgent?

We previously introduced SuperAgent, a powerful AI system designed to generate better answers than any single model can alone. SuperAgent uses inference-level optimization, which means combining responses from multiple AI models. Instead of relying on a single perspective, SuperAgent draws on a mixture of models and then refines their output with a critiquing model to deliver more comprehensive, accurate, and helpful answers. The result is a level of quality that stands above traditional single-model approaches.
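The orchestration flow described above (query several models, then let a critiquing model refine their answers) can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not Ninja's actual implementation: the names `orchestrate` and `majority_critic` are invented for this example, the "models" are plain functions standing in for calls to real LLM APIs, and the toy critic simply takes a majority vote, whereas the critiquing model described above would be an AI model that reviews and rewrites the candidates.

```python
from collections import Counter
from typing import Callable, List

# Hypothetical stand-in: a "model" is any function mapping a prompt to an answer string.
Model = Callable[[str], str]
# Hypothetical stand-in: a critic reads the prompt plus every candidate and returns one answer.
Critic = Callable[[str, List[str]], str]


def orchestrate(prompt: str, proposers: List[Model], critic: Critic) -> str:
    """Send the prompt to several models, then let a critic turn their answers into one response."""
    # Step 1: collect one candidate answer from each proposer model.
    candidates = [model(prompt) for model in proposers]
    # Step 2: the critic sees every candidate and produces the final answer.
    return critic(prompt, candidates)


def majority_critic(prompt: str, candidates: List[str]) -> str:
    """Toy critic: pick the answer most candidates agree on (ties go to the first one seen)."""
    return Counter(candidates).most_common(1)[0][0]


if __name__ == "__main__":
    # Three toy "models" that ignore the prompt and return fixed answers.
    models = [lambda p: "4", lambda p: "4", lambda p: "5"]
    print(orchestrate("What is 2 + 2?", models, majority_critic))  # prints 4
```

Keeping the critic as a pluggable function means the toy vote could be swapped for an LLM-based critique step without changing the fan-out logic.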

SuperAgent is a natural extension of our multi-model feature and of our belief that you should have a choice in which model you use. Building on the foundation we created for our Pro and Ultra subscribers, SuperAgent goes a step further: instead of asking you to pick a single model, it brings several models together seamlessly to deliver the most comprehensive, nuanced, and optimized responses possible.

We built three versions of the SuperAgent to balance speed, depth, and cost.

SuperAgent Turbo

Built for lightning-fast answers, Turbo delivers instant responses using our custom in-house fine-tuned models and is available to all subscribers.

SuperAgent Nexus

The most robust version of SuperAgent, Nexus delivers thoroughly researched, comprehensive responses by combining multiple flagship AI models for expert-level insights. It is available to Ultra and Business subscribers.

SuperAgent-R 2.0

Built for complex problems that require advanced reasoning, SuperAgent-R is based on DeepSeek R1 distilled into Llama 70B and is available to Ultra and Business subscribers.

Why We Tested SuperAgent Against Industry Benchmarks

To evaluate SuperAgent’s performance, we tested it against multiple foundational models such as GPT-4o, Gemini 1.5 Pro, and Claude 3.5 Sonnet. Benchmark testing is common practice in computer science, and it helps us evaluate how our multi-model approach to AI compares with the single-model approach.

Here are the benchmarks we used:

Arena-Hard-Auto (Chat)

A benchmark designed to test complex conversational abilities, focusing on the ability to handle intricate dialogue scenarios that require nuanced understanding and contextual awareness.

MATH-500

A benchmark aimed at evaluating an AI’s mathematical reasoning and problem-solving capabilities, specifically focusing on complex problems that involve higher-level mathematics.

LiveCodeBench (Coding)

A coding test that measures an AI’s ability to understand and generate code. This benchmark assesses the model’s capacity to write accurate code in response to a variety of prompts, including basic and intermediate programming challenges.

LiveCodeBench Hard (Coding)

An extension of LiveCodeBench, focusing on advanced coding tasks that involve complex problem-solving and algorithmic challenges. It’s designed to push the limits of an AI’s coding skills and evaluate its ability to manage more difficult programming scenarios.

GPQA (Graduate-Level Google-Proof Q&A)

A benchmark of difficult multiple-choice questions in biology, physics, and chemistry, written by domain experts and designed to be hard to answer by searching the web. It tests an AI’s ability to reason through complex, multi-step scientific problems.

AIME2024 (American Invitational Mathematics Examination 2024)

A benchmark built from the problems of the 2024 AIME, a challenging high-school mathematics competition. Each answer is an integer from 0 to 999, so the benchmark assesses a model’s ability to carry multi-step logic and numerical computation through to an exact result.

Together, these widely used benchmarks cover conversation, math, coding, and scientific reasoning, allowing us to compare SuperAgent's capabilities with those of standalone models.

SuperAgent Outperforms Foundational Models on Arena-Hard

As mentioned above, SuperAgent delivered outstanding results against foundational models on multiple benchmarks. Let’s take a closer look at Arena-Hard without style control, one of the most important benchmarks for assessing how well an AI system handles common, everyday tasks. This benchmark is essential for understanding practical AI performance, and SuperAgent excelled, clearly outperforming other leading models.

The result: on Arena-Hard, SuperAgent beat all of the foundational models we compared it against.

Arena-Hard

We want to highlight that Ninja’s SuperAgent outperformed OpenAI’s o1-mini and o1-preview, two reasoning models. This is especially exciting because o1-mini and o1-preview are not just AI models; they are advanced reasoning systems that are generally not compared with foundational models like Gemini 1.5 Pro or Claude 3.5 Sonnet. SuperAgent’s win over two reasoning models shows that its approach, combining the results from multiple models using a critiquing model, can produce better results than a single AI system.

SuperAgent Excels On Other Benchmarks

Beyond Arena-Hard, the Apex version of Ninja’s SuperAgent demonstrated exceptional performance in math, coding, and general problem-solving. These results highlight SuperAgent's ability to tackle complex problems with strong logic and precision. In coding, the accurate, functional code it generated consistently outperformed the other models tested.

LiveCodeBench - Coding

LiveCodeBench Hard - Coding

AIME2024 - Reasoning

GPQA - Reasoning

MATH-500

Across all benchmarks, SuperAgent surpassed many well-known foundational models and sometimes beat the most advanced reasoning models on the market.

Final Thoughts

The results speak for themselves: SuperAgent is a leap forward in how we think about AI-powered solutions. By leveraging multiple models, a refined critique system, and advanced inference-level optimization, SuperAgent delivers answers that are deeper, more accurate, and more relevant to your needs. Whether you need a complex coding solution, advanced reasoning, or simply the best possible conversational support, SuperAgent has shown that it can outperform traditional single-model approaches.

As we continue to innovate, our commitment remains the same: delivering the most intelligent, efficient, and powerful AI system possible, because better answers mean a better experience for you.